OpenStack is an open source platform for building private clouds, started as a joint project of Rackspace Hosting and NASA. It is mainly used to provide infrastructure as a service (IaaS). The platform relies on many services, such as MariaDB, RabbitMQ, Linux KVM, LVM and iSCSI.

We use two nodes for this setup: a controller node (ctrl.example.com) and a compute node (cmp.example.com). Each server has two NICs, one for external connectivity and one for internal connectivity. The IP addresses are:

1. ctrl.example.com: 192.168.2.14 on enp0s3 (NATed to the external network); enp0s8 is used for internal connectivity and has no IP address

2. cmp.example.com: 192.168.2.15 on enp0s3 (NATed to the external network); enp0s8 is used for internal connectivity and has no IP address

OpenStack is installed from the ctrl node. The prerequisites are:

- Set SELinux to permissive (or disabled) on both nodes

[root@ctrl ~]# getenforce

Permissive
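
A minimal sketch of the SELinux step, assuming the stock CentOS 7 config file /etc/selinux/config. The function name set_selinux_mode is hypothetical, and it only changes the setting applied at boot; setenforce 0 switches the running system to permissive immediately.

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist an SELinux mode in the config file.
# Usage: set_selinux_mode permissive [/etc/selinux/config]
set_selinux_mode() {
  local mode="$1"
  local cfg="${2:-/etc/selinux/config}"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
  esac
  # Rewrite only the SELINUX= line; SELINUXTYPE= does not match '^SELINUX='.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$cfg"
}
```

For example, on each node run set_selinux_mode permissive && setenforce 0; getenforce should then print Permissive as shown above.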

- Disable firewalld on both nodes

[root@ctrl ~]# systemctl status firewalld

firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)
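
The firewall step amounts to stopping firewalld and keeping it from starting at boot. A small sketch; the run wrapper and its DRY_RUN=1 switch (print the commands instead of running them) are a hypothetical convention, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical convention: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Stop firewalld now and disable it at boot.
disable_firewalld() {
  run systemctl stop firewalld
  run systemctl disable firewalld
}
```

Run disable_firewalld on both nodes (or DRY_RUN=1 disable_firewalld to preview); systemctl status firewalld should then report disabled and inactive (dead) as above.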

- Configure the IP address on enp0s3 on both nodes (enp0s8 is left unconfigured for now)

[root@ctrl ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:cf:f6:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.2.14/24 brd 192.168.2.255 scope global dynamic eth0

valid_lft 42065sec preferred_lft 42065sec

inet6 fe80::5054:ff:fecf:f6ef/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:c6:92:ee brd ff:ff:ff:ff:ff:ff

[root@cmp ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:4d:fa:06 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.15/24 brd 192.168.2.255 scope global dynamic eth0

valid_lft 42039sec preferred_lft 42039sec

inet6 fe80::5054:ff:fe4d:fa06/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:fe:a6:c8 brd ff:ff:ff:ff:ff:ff

********************************************************************************

- Map the hostnames to their IP addresses in /etc/hosts

[root@ctrl ~]# cat /etc/hosts

192.168.2.14 ctrl.example.com

192.168.2.15 cmp.example.com
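
If you script the /etc/hosts step, appending blindly adds a duplicate line on every re-run. A sketch of an idempotent helper; add_host_entry is a hypothetical name, and it treats an entry as present when the first hostname already appears as a whole word in the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME..." to a hosts file unless the first
# NAME already appears there as a whole word, so re-runs add no duplicates.
# Usage: add_host_entry /etc/hosts 192.168.2.14 ctrl.example.com
add_host_entry() {
  if [ $# -lt 3 ]; then
    echo "usage: add_host_entry FILE IP NAME..." >&2
    return 1
  fi
  local file="$1" ip="$2"
  shift 2
  # -F: match the name literally (dots are not wildcards); -w: whole word.
  if grep -qwF -- "$1" "$file" 2>/dev/null; then
    return 0
  fi
  echo "$ip $*" >> "$file"
}
```

For this setup: add_host_entry /etc/hosts 192.168.2.14 ctrl.example.com and add_host_entry /etc/hosts 192.168.2.15 cmp.example.com.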

- Update the system on both nodes

- Set up the OpenStack package repository

- Install Packstack (the openstack-packstack package) on the ctrl node
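
The post does not say which repository or OpenStack release it used. The sketch below assumes the RDO packages from the CentOS Cloud SIG, enabled through a centos-release-openstack-<release> package; RELEASE is a placeholder you must set yourself, and run/DRY_RUN is a hypothetical print-instead-of-run convention, not an existing tool.

```shell
#!/usr/bin/env bash
# Hypothetical convention: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper; the argument is "ctrl" or "cmp".
# RELEASE is an assumption: the post does not name an OpenStack release.
install_packstack_prereqs() {
  local role="$1"
  if [ -z "${RELEASE:-}" ]; then
    echo "set RELEASE to an OpenStack release name first" >&2
    return 1
  fi
  run yum -y update
  # Assumes the CentOS Cloud SIG release package that enables the RDO repo.
  run yum -y install "centos-release-openstack-${RELEASE}"
  # Packstack is only needed on the node that drives the installation.
  if [ "$role" = ctrl ]; then
    run yum -y install openstack-packstack
  fi
}
```

RELEASE=<release> DRY_RUN=1 install_packstack_prereqs ctrl previews the commands for the controller; pass cmp on the compute node.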

- Packstack performs an automated installation driven by an answer file that contains all configuration parameters. First generate the answer file on the ctrl node:

[root@ctrl ~]# packstack --gen-answer-file=/root/answers.txt

Packstack changed given value to required value /root/.ssh/id_rsa.pub

[root@ctrl ~]# cd /root/

[root@ctrl ~]# ll answers.txt

-rw-------. 1 root root 52366 Oct 5 02:05 answers.txt

- Set the following parameters in /root/answers.txt manually (adjust the values to your environment):

CONFIG_NTP_SERVERS=0.pool.ntp.org,1.pool.ntp.org,2.pool.ntp.org

CONFIG_CONTROLLER_HOST=192.168.2.14

CONFIG_COMPUTE_HOSTS=192.168.2.15

CONFIG_KEYSTONE_ADMIN_PW=password

CONFIG_NEUTRON_ML2_MECHANISM_DRIVERS=openvswitch

CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vlan

CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vlan

CONFIG_NEUTRON_ML2_VLAN_RANGES=physnet1:1000:2000

CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-enp0s8
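
The parameters can also be set non-interactively. A sketch, assuming each key already has a line in the generated answer file; set_answer is a hypothetical helper that replaces the whole KEY= line and escapes the characters that are special to sed, so values with commas, colons or ampersands go in literally.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in a Packstack answer file by replacing
# the existing KEY= line. Fails if the key is not in the file.
# Usage: set_answer /root/answers.txt CONFIG_COMPUTE_HOSTS 192.168.2.15
set_answer() {
  local file="$1" key="$2" value="$3"
  if ! grep -q "^${key}=" "$file"; then
    echo "$key not found in $file" >&2
    return 1
  fi
  # Escape \, & and the | delimiter so the value is inserted literally.
  local escaped
  escaped=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')
  sed -i "s|^${key}=.*|${key}=${escaped}|" "$file"
}
```

Call it once per parameter, for example set_answer /root/answers.txt CONFIG_CONTROLLER_HOST 192.168.2.14.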

- Start the installation with the answer file. It can take a long time (more than an hour here).

[root@ctrl ~]# packstack --answer-file=/root/answers.txt

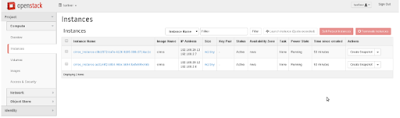

- When the installation completes, Packstack prints the link to the Horizon dashboard:

**** Installation completed successfully ******

Additional information:

* File /root/keystonerc_admin has been created on OpenStack client host 192.168.2.14. To use the command line tools you need to source the file.

* To access the OpenStack Dashboard browse to http://192.168.2.14/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

* Because of the kernel update the host 192.168.2.14 requires reboot.

* The installation log file is available at: /var/tmp/packstack/20160320-230116-mT1aV6/openstack-setup.log

* The generated manifests are available at: /var/tmp/packstack/20160320-230116-mT1aV6/manifests

- The Horizon login credentials are stored in /root/keystonerc_admin. In our case they are admin/password (the CONFIG_KEYSTONE_ADMIN_PW value from the answer file).

- Next, attach the physical interfaces to the Open vSwitch (OVS) bridges. After the OpenStack installation the interfaces look as below; note the extra OVS devices Packstack added (ovs-system, br-int, br-enp0s8 and, on ctrl only, br-ex):

[root@ctrl ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:cf:f6:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.2.14/24 brd 192.168.2.255 scope global dynamic eth0

valid_lft 41134sec preferred_lft 41134sec

inet6 fe80::5054:ff:fecf:f6ef/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:c6:92:ee brd ff:ff:ff:ff:ff:ff

4: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether 72:b8:b8:de:3a:f7 brd ff:ff:ff:ff:ff:ff

5: br-int: mtu 1500 qdisc noqueue state UNKNOWN

link/ether 0e:f7:ad:b9:21:48 brd ff:ff:ff:ff:ff:ff

inet6 fe80::cf7:adff:feb9:2148/64 scope link

valid_lft forever preferred_lft forever

6: br-enp0s8: mtu 1500 qdisc noqueue state UNKNOWN

link/ether f2:d0:68:22:b2:46 brd ff:ff:ff:ff:ff:ff

inet6 fe80::f0d0:68ff:fe22:b246/64 scope link

valid_lft forever preferred_lft forever

7: br-ex: mtu 1500 qdisc noqueue state UNKNOWN

link/ether 76:7a:de:52:ec:42 brd ff:ff:ff:ff:ff:ff

inet6 fe80::747a:deff:fe52:ec42/64 scope link

valid_lft forever preferred_lft forever

[root@cmp ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:4d:fa:06 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.15/24 brd 192.168.2.255 scope global dynamic eth0

valid_lft 40548sec preferred_lft 40548sec

inet6 fe80::5054:ff:fe4d:fa06/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:fe:a6:c8 brd ff:ff:ff:ff:ff:ff

6: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether 2e:11:a9:be:7b:cc brd ff:ff:ff:ff:ff:ff

7: br-int: mtu 1500 qdisc noqueue state UNKNOWN

link/ether a2:b9:7e:04:cd:48 brd ff:ff:ff:ff:ff:ff

inet6 fe80::a0b9:7eff:fe04:cd48/64 scope link

valid_lft forever preferred_lft forever

8: br-enp0s8: mtu 1500 qdisc noqueue state UNKNOWN

link/ether 36:8c:69:06:42:4b brd ff:ff:ff:ff:ff:ff

inet6 fe80::348c:69ff:fe06:424b/64 scope link

valid_lft forever preferred_lft forever

- On the ctrl node, back up the original interface configuration files and copy ifcfg-enp0s3 as the starting point for ifcfg-br-ex:

cp /etc/sysconfig/network-scripts/ifcfg-enp0s3 /root/ifcfg-enp0s3.backup

cp /etc/sysconfig/network-scripts/ifcfg-enp0s3 /etc/sysconfig/network-scripts/ifcfg-br-ex

cp /etc/sysconfig/network-scripts/ifcfg-enp0s8 /root/ifcfg-enp0s8.backup

- On the ctrl node, edit ifcfg-enp0s3 as below (its IP address moves to br-ex):

DEVICE=enp0s3

HWADDR=52:54:00:CF:F6:EF

ONBOOT=yes

- Edit ifcfg-br-ex as below; the bridge takes over the 192.168.2.14 address:

DEVICE=br-ex

TYPE=Ethernet

BOOTPROTO=static

ONBOOT=yes

NM_CONTROLLED=no

IPADDR=192.168.2.14

PREFIX=24

- On the ctrl node, edit ifcfg-enp0s8 as below:

DEVICE=enp0s8

HWADDR=52:54:00:C6:92:EE

TYPE=Ethernet

BOOTPROTO=none

NM_CONTROLLED=no

ONBOOT=yes
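
The files above are plain ifcfg files, and the ports are attached with ovs-vsctl in the next step. A commonly used alternative, swapped in here, is to let the Open vSwitch network scripts shipped with the openvswitch package (ifup-ovs) build the bridge and port from the keys DEVICETYPE=ovs, TYPE=OVSBridge, TYPE=OVSPort and OVS_BRIDGE. The sketch writes both files into a directory of your choice; write_ovs_ifcfg is hypothetical, and it omits the HWADDR line used above. GATEWAY is optional because the post gives no gateway address, but since enp0s3 originally got its address from DHCP ("dynamic" in the ip a output), you may need one in either approach to keep a default route once the address moves to br-ex.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write OVS-style ifcfg files for br-ex and enp0s3.
# Usage: write_ovs_ifcfg DIR IPADDR PREFIX [GATEWAY]
write_ovs_ifcfg() {
  if [ $# -lt 3 ]; then
    echo "usage: write_ovs_ifcfg DIR IPADDR PREFIX [GATEWAY]" >&2
    return 1
  fi
  local dir="$1" ip="$2" prefix="$3" gw="${4:-}"
  # br-ex becomes an OVS bridge that carries the node's IP address.
  printf '%s\n' DEVICE=br-ex DEVICETYPE=ovs TYPE=OVSBridge \
    BOOTPROTO=static "IPADDR=$ip" "PREFIX=$prefix" \
    ONBOOT=yes NM_CONTROLLED=no > "$dir/ifcfg-br-ex"
  # Optional default route; the post does not state a gateway address.
  if [ -n "$gw" ]; then
    printf 'GATEWAY=%s\n' "$gw" >> "$dir/ifcfg-br-ex"
  fi
  # enp0s3 becomes a plain port of br-ex with no address of its own.
  printf '%s\n' DEVICE=enp0s3 DEVICETYPE=ovs TYPE=OVSPort \
    OVS_BRIDGE=br-ex ONBOOT=yes NM_CONTROLLED=no > "$dir/ifcfg-enp0s3"
}
```

Write into a scratch directory first (for example write_ovs_ifcfg /tmp/ifcfg 192.168.2.14 24), compare with the originals, then copy into /etc/sysconfig/network-scripts. With these files, systemctl restart network should attach enp0s3 to br-ex by itself, which makes the manual add-port for br-ex unnecessary.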

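- Now add each physical interface as a port on its OVS bridge. The network restart is chained on the same line with ; because once enp0s3 joins br-ex you may not be able to type another command until the restart brings the address up on br-ex: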

ovs-vsctl add-port br-ex enp0s3; systemctl restart network

ovs-vsctl add-port br-enp0s8 enp0s8; systemctl restart network
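
ovs-vsctl add-port reports an error if the port already exists on the bridge, so re-running the commands above fails. A sketch that uses the real --may-exist option of ovs-vsctl to make the step repeatable; attach_port and the run/DRY_RUN print-instead-of-run wrapper are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical convention: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Attach a physical interface to an OVS bridge, then restart networking.
# --may-exist turns add-port into a no-op if the port is already attached.
attach_port() {
  local bridge="$1" iface="$2"
  run ovs-vsctl --may-exist add-port "$bridge" "$iface"
  run systemctl restart network
}
```

On ctrl run attach_port br-ex enp0s3 and attach_port br-enp0s8 enp0s8; on cmp only the second. As with the one-liners above, both commands run in a single invocation, so the restart does not depend on typing anything else.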

After adding the ports, the interfaces on ctrl look like this (the address 192.168.2.14 is now on br-ex):

[root@ctrl ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:cf:f6:ef brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fecf:f6ef/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: mtu 1500 qdisc pfifo_fast master ovs-system state UP qlen 1000

link/ether 52:54:00:c6:92:ee brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fec6:92ee/64 scope link

valid_lft forever preferred_lft forever

4: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether ea:c6:b3:ff:17:ba brd ff:ff:ff:ff:ff:ff

5: br-enp0s8: mtu 1500 qdisc noop state DOWN

link/ether f2:d0:68:22:b2:46 brd ff:ff:ff:ff:ff:ff

6: br-ex: mtu 1500 qdisc noqueue state UNKNOWN

link/ether 76:7a:de:52:ec:42 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.14/24 brd 192.168.2.255 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::747a:deff:fe52:ec42/64 scope link

valid_lft forever preferred_lft forever

7: br-int: mtu 1500 qdisc noop state DOWN

link/ether 0e:f7:ad:b9:21:48 brd ff:ff:ff:ff:ff:ff

Now check the OVS configuration in detail:

[root@ctrl ~]# ovs-vsctl show

0dcba8a0-bebe-4785-82d6-7c67619874cd

Bridge "br-enp0s8"

Port "phy-br-enp0s8"

Interface "phy-br-enp0s8"

type: patch

options: {peer="int-br-enp0s8"}

Port "enp0s8"

Interface "enp0s8"

Port "br-enp0s8"

Interface "br-enp0s8"

type: internal

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

Port "enp0s3"

Interface "enp0s3"

Bridge br-int

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port "int-br-enp0s8"

Interface "int-br-enp0s8"

type: patch

options: {peer="phy-br-enp0s8"}

ovs_version: "2.1.3"
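
To confirm which ports ended up on which bridge without reading the whole tree, the show output can be flattened. ovs_ports is a hypothetical awk filter that prints one "bridge port" pair per line; for a single interface, the real command ovs-vsctl port-to-br enp0s3 should simply print br-ex.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read `ovs-vsctl show` output on stdin and print
# "bridge port" pairs, one per line, with the quotes removed.
ovs_ports() {
  awk '
    { gsub(/"/, "") }                     # drop quotes around names
    $1 == "Bridge" { bridge = $2 }        # remember the current bridge
    $1 == "Port" && bridge != "" { print bridge, $2 }
  '
}
```

For example, ovs-vsctl show | ovs_ports | grep -x 'br-ex enp0s3' succeeds only if enp0s3 is a port of br-ex.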

- Now configure the compute node. Only enp0s8 changes there, because cmp has no br-ex. Edit ifcfg-enp0s8 on the compute node as below:

DEVICE=enp0s8

HWADDR=52:54:00:FE:A6:C8

TYPE=Ethernet

BOOTPROTO=none

NM_CONTROLLED=no

ONBOOT=yes

Add the interface to its OVS bridge on the compute node:

ovs-vsctl add-port br-enp0s8 enp0s8; systemctl restart network

- The resulting OVS configuration on the compute node looks like this:

[root@cmp ~]# ovs-vsctl show

cc9e8eff-ea10-40dc-adeb-2d6ee6fc9ed9

Bridge br-int

fail_mode: secure

Port "int-br-enp0s8"

Interface "int-br-enp0s8"

type: patch

options: {peer="phy-br-enp0s8"}

Port br-int

Interface br-int

type: internal

Bridge "br-enp0s8"

Port "phy-br-enp0s8"

Interface "phy-br-enp0s8"

type: patch

options: {peer="int-br-enp0s8"}

Port "enp0s8"

Interface "enp0s8"

Port "br-enp0s8"

Interface "br-enp0s8"

type: internal

ovs_version: "2.1.3"

- The installation creates /root/keystonerc_admin on the OpenStack client host (here the ctrl node, 192.168.2.14). Its contents:

[root@ctrl ~]# cat /root/keystonerc_admin

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=password

export OS_AUTH_URL=http://192.168.2.14:5000/v2.0/

export OS_REGION_NAME=RegionOne

export PS1='[\u@\h \W(keystone_admin)]\$ '

- Load these variables into the current shell by sourcing the file; the prompt changes to show keystone_admin:

[root@ctrl ~]# source /root/keystonerc_admin

[root@ctrl ~(keystone_admin)]#
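
Before running the client tools it can help to confirm that sourcing worked. A sketch; require_os_env is a hypothetical check on the credential variables exported by keystonerc_admin above.

```shell
#!/usr/bin/env bash
# Hypothetical check: fail unless the keystonerc_admin credentials are set.
require_os_env() {
  local var missing=0
  for var in OS_USERNAME OS_PASSWORD OS_TENANT_NAME OS_AUTH_URL; do
    # ${!var} reads the variable whose name is stored in $var.
    if [ -z "${!var:-}" ]; then
      echo "missing $var (source /root/keystonerc_admin first)" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Typical use: source /root/keystonerc_admin && require_os_env.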

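- Check the service status on the ctrl node to make sure all services are running. On the controller, openstack-nova-compute and openstack-nova-network are expected to be inactive: nova-compute runs on the compute node and networking is handled by Neutron.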

[root@ctrl ~(keystone_admin)]# openstack-status

== Nova services ==

openstack-nova-api: active

openstack-nova-cert: active

openstack-nova-compute: inactive (disabled on boot)

openstack-nova-network: inactive (disabled on boot)

openstack-nova-scheduler: active

openstack-nova-conductor: active

== Glance services ==

openstack-glance-api: active

...
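
Scanning openstack-status by eye is error-prone on a long list. A sketch of a hypothetical filter, not_active, that prints only the "service: state" lines whose state is not exactly active.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read `openstack-status` output on stdin and print
# every "service: state" line whose state is not "active".
not_active() {
  # Section headers such as "== Nova services ==" have no ": " and are skipped.
  awk -F': ' 'NF == 2 && $2 != "active" { print }'
}
```

Run openstack-status | not_active; on ctrl, openstack-nova-compute and openstack-nova-network appear in the list, matching the output above.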

- Also verify the Nova services on all hosts (run on the ctrl node):

[root@ctrl ~(keystone_admin)]# nova-manage service list

Binary Host Zone Status State Updated_At

nova-consoleauth controller internal enabled  2017-10-09 22:27:24

nova-scheduler controller internal enabled  2017-10-09 22:27:25

nova-conductor controller internal enabled  2017-10-09 22:27:24

nova-cert controller internal enabled  2017-10-09 22:27:21

nova-compute compute nova enabled  2017-10-09 22:27:24

This completes the basic installation of OpenStack on CentOS 7.