Below are the main points for securing Azure DevOps:

1. Managing users and groups using Role-Based Access Control (RBAC) to define and enforce granular permissions.

Role-Based Access Control (RBAC) is a method of managing user access and permissions based on users' roles within an organization. It helps maintain security by ensuring that users only have access to the resources and operations relevant to their job responsibilities. In Azure DevOps, you apply RBAC by adding users to security groups (such as Readers, Contributors, or Project Administrators) and assigning permissions to those groups rather than to individual users.
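To make the idea concrete, here is a minimal sketch of RBAC in Python. Permissions are attached to roles (groups), users receive permissions only through group membership, and every access check goes through that mapping. The group names echo Azure DevOps defaults, but the permission strings and the `ROLE_PERMISSIONS` table are hypothetical and do not correspond to Azure DevOps security namespaces.

```python
from dataclasses import dataclass, field

# Hypothetical permission names for illustration only; Azure DevOps uses its own
# security namespaces. The group names mirror common Azure DevOps defaults.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "Readers": frozenset({"repo.read", "pipeline.view"}),
    "Contributors": frozenset({"repo.read", "repo.write", "pipeline.view", "pipeline.queue"}),
    "Project Administrators": frozenset(
        {"repo.read", "repo.write", "pipeline.view", "pipeline.queue",
         "pipeline.edit", "project.manage"}
    ),
}


@dataclass
class User:
    name: str
    groups: set[str] = field(default_factory=set)


def effective_permissions(user: User) -> frozenset[str]:
    """Union of the permissions granted by every group the user belongs to."""
    perms: set[str] = set()
    for group in user.groups:
        perms |= ROLE_PERMISSIONS.get(group, frozenset())  # unknown groups grant nothing
    return frozenset(perms)


def is_allowed(user: User, permission: str) -> bool:
    """Access is decided by group membership only, never by per-user grants."""
    return permission in effective_permissions(user)


alice = User("alice", {"Contributors"})
print(is_allowed(alice, "repo.write"))      # True
print(is_allowed(alice, "project.manage"))  # False
```

Azure DevOps also supports explicit Deny settings, which generally take precedence over Allow; this sketch models Allow only.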

2. Applying the principle of least privilege for granting permissions to minimize potential risks.

The principle of least privilege (PoLP) is a security best practice that involves granting users only the minimum permissions they need to perform their job duties. By applying this principle, you can reduce the risk of unauthorized access, data breaches, and other security incidents.
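One way to apply least privilege is to compare what each principal has been granted with what its job actually requires, and revoke the difference. The sketch below assumes you already have both lists (for example, from a permissions review and from documented role requirements); `excess_permissions` and all principal and permission names are hypothetical.

```python
def excess_permissions(
    granted: dict[str, set[str]], required: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return, for each principal, the permissions it holds but does not need."""
    report: dict[str, set[str]] = {}
    for principal, perms in granted.items():
        # A principal with no documented need gets an empty "required" set,
        # so everything it holds is flagged for review.
        extra = perms - required.get(principal, set())
        if extra:
            report[principal] = extra
    return report


# Hypothetical principals and permission names.
granted = {
    "build-service": {"repo.read", "repo.write", "pipeline.queue"},
    "alice": {"repo.read"},
}
required = {
    "build-service": {"repo.read", "pipeline.queue"},
    "alice": {"repo.read"},
}
for principal, extra in excess_permissions(granted, required).items():
    print(principal, "can drop", sorted(extra))  # build-service can drop ['repo.write']
```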

3. Regularly reviewing user accounts and disabling unnecessary accounts to reduce the attack surface.

Regularly reviewing user accounts and disabling unnecessary accounts is essential to maintain a secure environment in Azure DevOps. By keeping user accounts up to date and removing unused or inactive accounts, you can minimize the risk of unauthorized access and data breaches.
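A periodic review can be partly automated by flagging accounts that have never signed in or have been idle too long. This sketch assumes you have exported each user's last access time (for example, from the organization's user list) into a dictionary; the 90-day threshold and the account names are assumptions to adjust to your own policy.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def find_inactive(
    last_access: dict[str, Optional[datetime]],
    now: datetime,
    max_idle_days: int = 90,  # assumed threshold; set this from your own policy
) -> list[str]:
    """Return accounts that never signed in or have been idle longer than max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        user for user, seen in last_access.items()
        if seen is None or seen < cutoff
    )


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
last_access = {
    "alice@example.com": datetime(2024, 5, 20, tzinfo=timezone.utc),
    "bob@example.com": datetime(2023, 12, 1, tzinfo=timezone.utc),
    "old-service@example.com": None,  # never signed in
}
print(find_inactive(last_access, now))
# ['bob@example.com', 'old-service@example.com']
```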

4. Implementing strong authentication with Multi-Factor Authentication (MFA) to protect against unauthorized access.

Implementing strong authentication with Multi-Factor Authentication (MFA) is a critical security measure that helps protect your Azure DevOps environment from unauthorized access. MFA requires users to provide at least two forms of verification before they are granted access, making it much more difficult for attackers to compromise user accounts.
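Azure AD performs MFA for Azure DevOps, so you do not implement it yourself; the sketch below only illustrates what a second factor checks. It verifies a time-based one-time password (TOTP) as defined in RFC 6238, the scheme behind the six-digit codes many authenticator apps display, using only the Python standard library. The secret is the RFC's published test value, not a real credential.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: int, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP with HMAC-SHA1 and RFC 4226 dynamic truncation."""
    counter = for_time // step  # number of whole time steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # low 4 bits of the last byte pick a 4-byte window
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify(secret: bytes, code: str, now: Optional[int] = None,
           step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step or `window` steps either side (clock drift)."""
    now = int(time.time()) if now is None else now
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step, digits), code)
        for drift in range(-window, window + 1)
    )


# RFC 6238 test secret (ASCII "12345678901234567890"), not a real credential.
SECRET = b"12345678901234567890"
print(totp(SECRET, 59, digits=8))        # 94287082
print(verify(SECRET, "287082", now=59))  # True
```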

5. Integrating centralized identity management using Single Sign-On (SSO) and Azure Active Directory.

Providing centralized identity management using Single Sign-On (SSO) and Azure Active Directory (Azure AD, now called Microsoft Entra ID) integration simplifies access control and enhances security in Azure DevOps. SSO allows users to authenticate once and access multiple applications, while Azure AD integration enables centralized management of user accounts and permissions.

6. Reducing authentication risks using risk-based policies and Azure AD Identity Protection integration.

Reducing authentication risks with risk-based policies and Azure AD Identity Protection helps enhance security in Azure DevOps by detecting and responding to potential threats in real time. Risk-based policies evaluate user behavior and other factors to identify potential security risks, while Azure AD Identity Protection uses machine learning algorithms to detect suspicious activities.
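The sketch below shows the shape of a risk-based policy: sign-in signals are turned into a risk level, and the policy maps each level to an action. The signals, weights, and thresholds are hypothetical; Azure AD Identity Protection computes risk with its own detections and models, and you configure the response in policy rather than in code.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REQUIRE_MFA = "require MFA"
    BLOCK = "block"


@dataclass
class SignIn:
    user: str
    country: str
    known_device: bool
    impossible_travel: bool  # e.g. two sign-ins too far apart to be physically possible


def risk_level(signin: SignIn, usual_countries: set[str]) -> str:
    """Toy scoring with hypothetical weights; Identity Protection uses its own models."""
    score = 0
    if signin.country not in usual_countries:
        score += 1
    if not signin.known_device:
        score += 1
    if signin.impossible_travel:
        score += 2
    if score >= 3:
        return "high"
    return "medium" if score >= 1 else "low"


# Policy: low risk passes, medium risk must complete MFA, high risk is blocked.
POLICY = {"low": Action.ALLOW, "medium": Action.REQUIRE_MFA, "high": Action.BLOCK}


def decide(signin: SignIn, usual_countries: set[str]) -> Action:
    return POLICY[risk_level(signin, usual_countries)]


home = {"NL"}
print(decide(SignIn("alice", "NL", True, False), home))   # Action.ALLOW
print(decide(SignIn("alice", "BR", False, False), home))  # Action.REQUIRE_MFA
print(decide(SignIn("alice", "BR", False, True), home))   # Action.BLOCK
```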

7. Restricting access with IP-based network security groups and private networks.

Restricting access using IP-based network security groups (NSGs) and private networks helps enhance security in Azure DevOps by limiting access to the resources your pipelines use, such as self-hosted agents and deployment targets, to specific IP addresses or address ranges. This approach helps prevent unauthorized access and reduces the attack surface of your environment.
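Network security groups evaluate rules in priority order, lowest number first, and stop at the first rule that matches. The sketch below models that behavior for inbound traffic, for example to a subnet of self-hosted agents. It is a simplification that assumes rules match only on source prefix and destination port, and it replaces the built-in default rules with a single final deny; the `Rule` type and the addresses are illustrative.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    priority: int  # NSG priorities run from 100 to 4096; lower numbers are evaluated first
    source: str    # source address prefix in CIDR notation
    port: int      # destination port
    allow: bool


def is_allowed(rules: list[Rule], source_ip: str, port: int) -> bool:
    """Return the decision of the first matching rule, or deny if none matches."""
    ip = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port == port and ip in ipaddress.ip_network(rule.source):
            return rule.allow  # first match wins; later rules are never consulted
    return False  # stands in for the default deny-all inbound rule


# Documentation address ranges (RFC 5737), not real networks.
rules = [
    Rule(100, "203.0.113.0/24", 22, True),  # SSH only from a hypothetical admin network
    Rule(200, "0.0.0.0/0", 22, False),      # SSH from anywhere else is denied
    Rule(300, "0.0.0.0/0", 443, True),      # HTTPS open
]
print(is_allowed(rules, "203.0.113.10", 22))    # True
print(is_allowed(rules, "198.51.100.7", 22))    # False
print(is_allowed(rules, "198.51.100.7", 8080))  # False (no rule matches)
```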

8. Establishing secure communication with on-premises systems using VPN or ExpressRoute.

Establishing secure communication with on-premises systems using VPN or ExpressRoute is essential when you need to integrate Azure DevOps with your existing infrastructure. Both options allow you to create private connections between your on-premises network and Azure, ensuring secure data transfer and reducing exposure to the public internet.

9. Protecting and routing network traffic with Azure DDoS Protection and Azure Firewall.

Protecting and routing network traffic with Azure DDoS Protection and Azure Firewall enhances the security of your Azure DevOps environment by safeguarding against Distributed Denial of Service (DDoS) attacks and filtering network traffic based on specific rules.

10. Applying code review processes and utilizing static and dynamic code analysis tools for vulnerability detection.

Applying code review processes to detect security vulnerabilities is essential for ensuring the security and reliability of your Azure DevOps projects. Code reviews help identify potential issues, such as missing input validation or hard-coded secrets, early in the development process, reducing the risk of security breaches and improving overall code quality.

11. Establishing secure coding standards and ensuring dependency security.

Secure coding standards are easiest to enforce when they are checked automatically. Using static and dynamic code analysis tools for automatic detection of vulnerabilities is a crucial part of ensuring the security of your Azure DevOps projects: static application security testing (SAST) tools inspect source code without running it, while dynamic application security testing (DAST) tools probe the running application from the outside. Select suitable SAST and DAST tools based on your organization's requirements, programming languages, and frameworks. Examples of SAST tools include SonarQube, Fortify, and Checkmarx, while examples of DAST tools include OWASP ZAP, Burp Suite, and Arachni. For dependency security, scan third-party packages for known vulnerabilities as part of every build and keep them up to date.

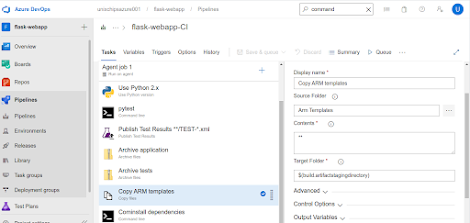

12. Incorporating security controls and automated tests in Build and Release pipelines.

Adding security controls and automated tests in Build and Release pipelines can help improve the security of your Azure DevOps projects by identifying and addressing vulnerabilities early in the development process. Integrating security checks into your pipelines ensures that security is an integral part of your software development lifecycle.
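A common way to turn scan results into a pipeline control is a gate step that fails the build when serious findings appear. Many SAST tools can export results in SARIF (Static Analysis Results Interchange Format), an OASIS standard JSON format, so the sketch below reads a SARIF file and exits with a non-zero code, which fails a pipeline script step, when it finds results at or above a chosen level. The script name, the file name, and the choice of `error` as the blocking level are assumptions.

```python
import json
import sys

# SARIF result levels, ordered from least to most severe.
SEVERITY = {"none": 0, "note": 1, "warning": 2, "error": 3}


def count_findings(sarif: dict, min_level: str = "error") -> int:
    """Count results across all runs at or above min_level."""
    threshold = SEVERITY[min_level]
    count = 0
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # An absent level defaults to "warning" (rule-level defaults are ignored here).
            level = result.get("level", "warning")
            if SEVERITY.get(level, 0) >= threshold:
                count += 1
    return count


def main(path: str, min_level: str = "error") -> int:
    with open(path, encoding="utf-8") as f:
        findings = count_findings(json.load(f), min_level)
    print(f"{findings} finding(s) at level '{min_level}' or above")
    return 1 if findings else 0  # a non-zero exit code fails the script step


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a pipeline, it would run as a script step after the scan, for example `python sarif_gate.py results.sarif`, where both file names are hypothetical.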

13. Securing agents with trusted agent pools and implementing Git branch policies and pull request reviews for code security.

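Securing agents by using trusted agent pools is essential to ensure the integrity and security of your build and release processes in Azure DevOps. Trusted agent pools help minimize the risk of unauthorized access to, or tampering with, your build and release pipelines. On the code side, Git branch policies such as a minimum number of pull request reviewers and build validation ensure that changes cannot merge into protected branches without review and a passing build.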

14. Storing credentials, certificates, and access keys securely in Azure Key Vault and configuring access for Azure DevOps pipelines.

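Securely storing credentials, certificates, and access keys in Azure Key Vault is crucial for protecting sensitive information and maintaining the security of your Azure DevOps projects. Azure Key Vault helps centralize and manage secrets, making it easier to implement secure access controls and monitor usage. To give pipelines access, link a variable group to the key vault or use the Azure Key Vault pipeline task, and grant the underlying service connection read access only to the secrets it needs.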

15. Monitoring changes using Azure DevOps audit logs for security, compliance, and operational awareness.

Monitoring changes using Azure DevOps audit logs is essential for maintaining security, compliance, and operational awareness in your DevOps environment. Audit logs provide visibility into activities and changes within your Azure DevOps projects, enabling you to track user behavior, identify potential security issues, and troubleshoot problems.
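Audit logs can be exported (as JSON or CSV) or streamed to services such as Azure Monitor logs or Splunk for analysis. The sketch below filters an exported log for security-relevant areas and counts events per actor, which makes unusual activity easier to spot. The field names (`area`, `actorDisplayName`) and area values are assumptions modeled loosely on the export format; check them against a real export before relying on this.

```python
import json
from collections import Counter

# Assumed field names and area values; verify them against an actual export
# from your organization.
WATCHED_AREAS = {"Permissions", "Policy", "Token"}


def security_events(entries: list[dict]) -> list[dict]:
    """Keep only entries from areas that matter most for security reviews."""
    return [e for e in entries if e.get("area") in WATCHED_AREAS]


def events_per_actor(entries: list[dict]) -> Counter:
    """Count security-relevant events per actor, to spot unusual activity."""
    return Counter(e.get("actorDisplayName", "<unknown>") for e in security_events(entries))


# Hypothetical sample in the shape assumed above.
sample = json.loads("""[
  {"area": "Permissions", "actorDisplayName": "alice", "details": "Changed repository permissions"},
  {"area": "Git", "actorDisplayName": "bob", "details": "Created a branch"},
  {"area": "Policy", "actorDisplayName": "alice", "details": "Removed a branch policy"},
  {"area": "Token", "actorDisplayName": "bob", "details": "Created a personal access token"}
]""")
print(events_per_actor(sample).most_common())  # [('alice', 2), ('bob', 1)]
```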

16. Continuously tracking and improving security posture with Azure Policy and Azure Security Center.

Continuously tracking and improving your security posture with Azure Policy and Azure Security Center (now Microsoft Defender for Cloud) is essential for ensuring the ongoing security and compliance of your Azure DevOps environment. These tools help you define, monitor, and enforce security policies across your Azure resources, providing a comprehensive view of your security posture and facilitating continuous improvement.

17. Conducting internal and external security audits and penetration tests for evaluation and continuous improvement.

Performing internal and external security audits and penetration tests is essential for evaluating the security of your Azure DevOps environment and identifying potential vulnerabilities. Regular audits and tests help you uncover security weaknesses, validate existing security controls, and prioritize remediation efforts.

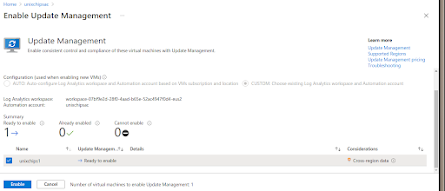

18. Regularly review and update the security configurations of your Azure DevOps services, resources, and tools.

Regularly reviewing and updating the security configurations of your Azure DevOps services, resources, and tools is an essential practice to maintain a secure environment and address evolving threats.

19. Implement secure baselines for your Azure resources and enforce them consistently across your environment.

Implementing secure baselines for your Azure resources and enforcing them consistently across your environment is crucial to maintaining a secure and compliant Azure DevOps setup.

20. Use Azure Policy to define and enforce security configurations across your Azure resources.

Using Azure Policy to define and enforce security configurations across your Azure resources is a crucial part of maintaining a secure and compliant environment.
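Azure Policy rules are JSON documents with an `if` condition and a `then` effect. To show how such a rule is evaluated, here is a toy evaluator that supports a small subset of the rule language (`allOf`, `anyOf`, `not`, `equals`, `notEquals`, `in`) against a flattened resource. It is not the Azure Policy engine, and the example rule is a simplified version of a storage-account HTTPS requirement; the real built-in definitions handle more cases.

```python
from typing import Any


def matches(condition: dict[str, Any], resource: dict[str, Any]) -> bool:
    """Evaluate a small subset of the Azure Policy rule language against a flat resource."""
    if "allOf" in condition:
        return all(matches(c, resource) for c in condition["allOf"])
    if "anyOf" in condition:
        return any(matches(c, resource) for c in condition["anyOf"])
    if "not" in condition:
        return not matches(condition["not"], resource)
    value = resource.get(condition["field"])  # a missing field evaluates to None
    if "equals" in condition:
        return value == condition["equals"]
    if "notEquals" in condition:
        return value != condition["notEquals"]
    if "in" in condition:
        return value in condition["in"]
    raise ValueError(f"unsupported condition: {condition}")


def evaluate(rule: dict[str, Any], resource: dict[str, Any]) -> str:
    """Return the rule's effect if the 'if' block matches, otherwise 'compliant'."""
    return rule["then"]["effect"] if matches(rule["if"], resource) else "compliant"


HTTPS_ONLY = "Microsoft.Storage/storageAccounts/supportsHttpsTrafficOnly"

# Simplified rule: deny storage accounts that do not enforce HTTPS-only traffic.
https_only_rule = {
    "if": {
        "allOf": [
            {"field": "type", "equals": "Microsoft.Storage/storageAccounts"},
            {"field": HTTPS_ONLY, "notEquals": True},
        ]
    },
    "then": {"effect": "deny"},
}

insecure = {"type": "Microsoft.Storage/storageAccounts", HTTPS_ONLY: False}
secure = {**insecure, HTTPS_ONLY: True}
print(evaluate(https_only_rule, insecure))  # deny
print(evaluate(https_only_rule, secure))    # compliant
```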

21. Continuously monitor configuration changes and assess their impact on your security posture.

Continuously monitoring configuration changes and assessing their impact on your security posture is vital for maintaining a secure environment and addressing potential risks in a timely manner.

22. Implement a robust backup and recovery strategy for your critical data, including source code, artifacts, and configuration data.

Implementing a robust backup and recovery strategy for your critical data, including source code, artifacts, and configuration data, is essential for ensuring business continuity and reducing the impact of data loss or corruption. The main points to consider are: identify critical data, define backup frequency and retention policies, choose appropriate backup methods, use Azure-native backup solutions, store backups offsite or in multiple locations, and encrypt your backups.
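For the retention part, a policy such as "keep every daily backup for a week, then one per week for a month" can be expressed in a few lines. The sketch below decides which backups to keep under that hypothetical policy; `backups_to_keep` and its default windows are assumptions, and in practice Azure Backup lets you configure retention in the backup policy instead.

```python
from datetime import date, timedelta


def backups_to_keep(backups: list[date], today: date,
                    keep_days: int = 7, keep_weeks: int = 4) -> set[date]:
    """Keep every backup younger than keep_days, then one per ISO week up to keep_weeks."""
    keep: set[date] = set()
    weeks_seen: set[tuple[int, int]] = set()
    for backup in sorted(backups, reverse=True):  # newest first
        age = (today - backup).days
        if age < keep_days:
            keep.add(backup)  # recent: keep every daily backup
        elif age < keep_weeks * 7:
            week = tuple(backup.isocalendar())[:2]  # (ISO year, ISO week number)
            if week not in weeks_seen:  # older: keep only the newest backup of each week
                weeks_seen.add(week)
                keep.add(backup)
        # anything older than keep_weeks weeks is not kept (a candidate for deletion)
    return keep


today = date(2024, 6, 30)
daily = [today - timedelta(days=i) for i in range(40)]
kept = backups_to_keep(daily, today)
print(len(kept))  # 10
```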

23. Use Azure Backup and Azure Site Recovery to protect your data and applications.

Using Azure Backup and Azure Site Recovery to protect your data and applications is an effective way to ensure business continuity and minimize downtime in the event of data loss or disasters.

24. Regularly test your data recovery processes to ensure they are effective and up to date.

Regularly testing your data recovery processes is crucial for maintaining business continuity and reducing the impact of data loss or corruption. The main points to consider are: develop a testing schedule, test various recovery scenarios, document test results, update recovery plans, train and educate your teams, and review and update your testing processes. Regular testing confirms that your recovery processes still work as your systems change, and it supports a culture of continuous improvement and collaboration across teams.
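One concrete recovery test is to restore a backup into a scratch location and compare it, file by file, with a manifest of checksums taken at backup time. The sketch below does this with SHA-256 hashes from the standard library; the function names and the idea of storing a manifest alongside each backup are assumptions, not a feature of any particular backup product.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hash."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(expected: dict[str, str], restored_root: Path) -> list[str]:
    """List files that are missing, changed, or unexpected after a restore."""
    actual = manifest(restored_root)
    problems = []
    for name, digest in expected.items():
        if name not in actual:
            problems.append(f"missing: {name}")
        elif actual[name] != digest:
            problems.append(f"changed: {name}")
    for name in sorted(actual.keys() - expected.keys()):
        problems.append(f"unexpected: {name}")
    return problems
```

Under this approach you would record `manifest(backup_dir)` when the backup is taken and run `verify_restore(saved_manifest, restore_dir)` after each test restore; an empty list means the restore matched.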

25. Establish a disaster recovery plan to minimize downtime and data loss in case of a security breach or system failure.

Establishing a disaster recovery plan is essential to minimize downtime and data loss in case of a security breach or system failure. The main points to consider are: identify critical systems and assets, define recovery objectives, develop recovery strategies, document recovery procedures, test and validate the plan, train and educate your teams, and review and update the plan. A tested, up-to-date plan helps maintain business continuity and protect your organization's assets when an incident does occur.
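Recovery objectives are usually stated as a recovery point objective (RPO, how much data you can afford to lose, measured in time) and a recovery time objective (RTO, how long the service can be down). The sketch below checks the timings of one recovery drill against both; the four-hour and eight-hour targets and the timestamps are example values only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RecoveryObjectives:
    rpo: timedelta  # recovery point objective: maximum tolerable data loss
    rto: timedelta  # recovery time objective: maximum tolerable downtime


def check_drill(objectives: RecoveryObjectives, last_good_backup: datetime,
                failure_at: datetime, restored_at: datetime) -> dict[str, bool]:
    """Compare one recovery drill (or a real incident) against the objectives."""
    data_loss = failure_at - last_good_backup  # changes made after the backup are lost
    downtime = restored_at - failure_at
    return {"rpo_met": data_loss <= objectives.rpo, "rto_met": downtime <= objectives.rto}


objectives = RecoveryObjectives(rpo=timedelta(hours=4), rto=timedelta(hours=8))
result = check_drill(
    objectives,
    last_good_backup=datetime(2024, 6, 1, 2, 0),
    failure_at=datetime(2024, 6, 1, 5, 30),
    restored_at=datetime(2024, 6, 1, 15, 0),
)
print(result)  # {'rpo_met': True, 'rto_met': False}
```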

26. Maintain an up-to-date inventory of all Azure DevOps resources, including repositories, pipelines, environments, and tools.

Maintaining an up-to-date inventory of all Azure DevOps resources, including repositories, pipelines, environments, and tools, is crucial for managing and securing your organization's assets effectively. The main points to consider are: create a centralized inventory, include relevant metadata, implement a tagging strategy, automate inventory updates, regularly review and audit your inventory, and integrate with other asset management systems. An accurate inventory makes it easier to track changes and enforce access control policies, because you cannot protect resources you do not know exist.

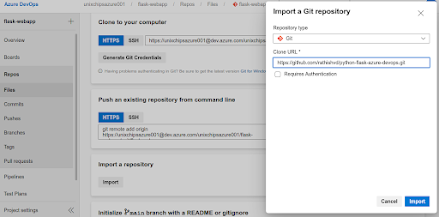

27. Use Azure Resource Manager (ARM) templates to manage your Azure resources in a consistent and automated manner.

Using Azure Resource Manager (ARM) templates to manage your Azure resources in a consistent and automated manner is an important best practice for managing infrastructure as code. The main points to consider are: standardize resource configurations, improve collaboration and version control, automate resource provisioning and updates, simplify resource management, validate and test templates, and reuse and share templates. Because templates are versioned alongside your code, they reduce the potential for human error and inconsistencies and make infrastructure changes reviewable like any other change.
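ARM templates are JSON documents, so secure settings such as HTTPS-only traffic, TLS 1.2, and no anonymous blob access can be written into the template and applied on every deployment. For illustration, the sketch below builds a minimal storage account template as a Python dictionary and runs a basic structural check before serializing it; in practice you would usually keep the JSON (or Bicep) file in the repository. The resource type, `apiVersion`, and parameter names are example choices to adapt.

```python
import json

REQUIRED_KEYS = ("$schema", "contentVersion", "resources")


def storage_account_template() -> dict:
    """Minimal ARM template for a storage account with secure settings baked in."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "storageAccountName": {"type": "string"},
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"},
        },
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",
                "name": "[parameters('storageAccountName')]",
                "location": "[parameters('location')]",
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
                "properties": {
                    "supportsHttpsTrafficOnly": True,  # reject plain HTTP
                    "minimumTlsVersion": "TLS1_2",
                    "allowBlobPublicAccess": False,    # no anonymous blob access
                },
            }
        ],
    }


def missing_keys(template: dict) -> list[str]:
    """A basic structural check; it does not replace ARM's own template validation."""
    return [key for key in REQUIRED_KEYS if key not in template]


template = storage_account_template()
problems = missing_keys(template)
if problems:
    raise SystemExit(f"template is missing: {problems}")
template_json = json.dumps(template, indent=2)  # save this as, e.g., storage.json
```

After saving the JSON as, say, `storage.json`, it can be previewed with `az deployment group what-if` and deployed with `az deployment group create --resource-group <rg> --template-file storage.json --parameters storageAccountName=<name>`.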

28. Implement tagging strategies to categorize your Azure resources based on project, team, or other relevant attributes.

Implementing tagging strategies to categorize your Azure resources based on project, team, or other relevant attributes is an essential practice for effective resource management and organization. The main points to consider are: define a consistent tagging strategy, use meaningful and descriptive tags, enforce tag usage, monitor and audit tag usage, update and maintain your tagging strategy, and use tags for cost management and reporting. Consistent tags improve resource management, organization, and cost allocation, and they make ownership clear across teams.
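Azure Policy can enforce tags at deployment time; for auditing what already exists, a small check like the one below reports missing or invalid tags. The required tag names and allowed values are a hypothetical tagging standard, and the resource dictionaries stand in for data you would pull from your inventory.

```python
from typing import Optional

# Hypothetical tagging standard: tag name -> allowed values (None means any non-empty value).
REQUIRED_TAGS: dict[str, Optional[set[str]]] = {
    "project": None,
    "team": None,
    "environment": {"dev", "test", "prod"},
}


def tag_violations(resources: list[dict]) -> dict[str, list[str]]:
    """Map each non-compliant resource name to a list of tagging problems."""
    report: dict[str, list[str]] = {}
    for resource in resources:
        # Azure treats tag names as case-insensitive, so normalize them; values are kept as-is.
        tags = {name.lower(): value for name, value in (resource.get("tags") or {}).items()}
        problems = []
        for name, allowed in REQUIRED_TAGS.items():
            if not tags.get(name):
                problems.append(f"missing tag '{name}'")
            elif allowed is not None and tags[name] not in allowed:
                problems.append(f"invalid value '{tags[name]}' for tag '{name}'")
        if problems:
            report[resource["name"]] = problems
    return report


resources = [
    {"name": "rg-web", "tags": {"Project": "shop", "team": "web", "environment": "prod"}},
    {"name": "st-logs", "tags": {"project": "shop", "environment": "staging"}},
    {"name": "vm-old"},
]
for name, problems in tag_violations(resources).items():
    print(name, problems)
```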

29. Continuously monitor your inventory and resources for any unauthorized changes or access.

Continuously monitoring your inventory and resources for any unauthorized changes or access is crucial for maintaining the security and integrity of your Azure DevOps environment. The main points to consider are: use Azure Monitor, review Azure DevOps audit logs, implement Azure Security Center, configure Azure Active Directory (Azure AD) monitoring, set up intrusion detection and prevention systems, regularly audit access control and permissions, and use automated tools for monitoring. Continuous monitoring lets you detect potential security issues early and respond quickly to mitigate risks.
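Detecting unauthorized changes comes down to comparing periodic snapshots of your inventory and checking each change against what was approved. The sketch below assumes snapshots are dictionaries keyed by a resource ID and that approved changes come from your change-management process; the function names, IDs, and settings are hypothetical.

```python
def diff_snapshots(before: dict[str, dict], after: dict[str, dict]) -> dict[str, list[str]]:
    """Compare two inventory snapshots keyed by resource ID."""
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "modified": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }


def unauthorized_changes(diff: dict[str, list[str]], approved: set[str]) -> list[str]:
    """Changed resource IDs that no approved change request covers."""
    changed = {rid for ids in diff.values() for rid in ids}
    return sorted(changed - approved)


# Hypothetical resource IDs and settings.
before = {
    "pipeline/deploy-prod": {"required_approvals": 2},
    "repo/api": {"branch_policy": True},
    "agentpool/linux": {"agents": 3},
}
after = {
    "pipeline/deploy-prod": {"required_approvals": 0},
    "repo/api": {"branch_policy": True},
    "serviceconnection/new-subscription": {"scope": "subscription"},
}
diff = diff_snapshots(before, after)
print(unauthorized_changes(diff, approved={"agentpool/linux"}))
# ['pipeline/deploy-prod', 'serviceconnection/new-subscription']
```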

In conclusion, adopting a comprehensive security approach when using Azure DevOps is crucial for protecting your organization's assets and ensuring the integrity of your development and deployment processes. By following the guidelines outlined above, you can effectively manage access control, authentication, network security, code and pipeline security, secrets in Azure Key Vault, auditing and monitoring, configuration management, backup and disaster recovery, and resource inventory to maintain a secure environment.