1. As per the diagram above, first check whether any pods are in the Pending state using the following command:

```
PS /home/unixchips> kubectl get pods
NAME                           READY   STATUS    RESTARTS   AGE
webfrontend-5dc5464686-c7ncf   1/1     Running   0          18h
webfrontend-5dc5464686-ccrj2   1/1     Running   0          4d5h
webfrontend-5dc5464686-gjmwp   1/1     Running   0          18h
```
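The same check can be narrowed with `kubectl get pods --field-selector=status.phase=Pending`. If you want to script it, below is a minimal Python sketch, assuming `kubectl` is on the PATH and already pointed at the cluster; `pods_in_phase` and `get_pods_json` are illustrative helper names, not part of any library. It reads the JSON form of `kubectl get pods` instead of the table, because the table layout is meant for humans.

```python
from __future__ import annotations

import json
import subprocess


def pods_in_phase(pods_json: str, phase: str = "Pending") -> list[str]:
    """Return the names of pods whose status.phase equals `phase`.

    `pods_json` is the text printed by `kubectl get pods -o json`.
    """
    data = json.loads(pods_json)
    return [
        item["metadata"]["name"]
        for item in data.get("items", [])
        if item.get("status", {}).get("phase") == phase
    ]


def get_pods_json(namespace: str = "default") -> str:
    """Run kubectl (assumed to be installed and configured for the cluster)."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


if __name__ == "__main__":
    pending = pods_in_phase(get_pods_json())
    print("Pending pods:", pending or "none")
```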

2. If the pods are in the Pending state, check their details as below. Any error related to the cluster state (for example, no node with enough free CPU or memory to schedule the pod) is reported in the Events section of this output:

```
PS /home/unixchips> kubectl describe pod webfrontend-5dc5464686-c7ncf
Name:         webfrontend-5dc5464686-c7ncf
Namespace:    default
Priority:     0
Node:         aks-agentpool-54305753-vmss000000/10.240.0.4
Start Time:   Mon, 14 Jun 2021 19:29:16 +0000
Labels:       app.kubernetes.io/instance=webfrontend
              app.kubernetes.io/name=webfrontend
              pod-template-hash=5dc5464686
Annotations:  <none>
Status:       Running
IP:           10.240.0.107
IPs:
  IP:           10.240.0.107
Controlled By:  ReplicaSet/webfrontend-5dc5464686
Containers:
  webfrontend:
    Container ID:   containerd://9bba1ea0024a1e44d8d1d760985541365c48bc656e31214e06d7d68ae8905819
    Image:          unixchipsacr1.azurecr.io/webfrontend:v1
    Image ID:       unixchipsacr1.azurecr.io/webfrontend@sha256:156eeb3ef36728fbfb914591a852ab890c6eff297a463ef26d2781c373957f3e
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Mon, 14 Jun 2021 19:29:17 +0000
    Ready:          True
    Restart Count:  0
    Liveness:       http-get http://:http/ delay=0s timeout=1s period=10s #success=1 #failure=3
    Readiness:      http-get http://:http/ delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from webfrontend-token-92dz6 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  webfrontend-token-92dz6:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  webfrontend-token-92dz6
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:          <none>
```
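The Events section can also be pulled on its own with `kubectl get events --field-selector involvedObject.name=<pod>`. Below is a minimal Python sketch of that, assuming `kubectl` is on the PATH; `warning_events` and `get_pod_events_json` are hypothetical helper names. It keeps only events of type Warning, which is where scheduling problems (for example an event with reason `FailedScheduling`) are reported.

```python
from __future__ import annotations

import json
import subprocess


def warning_events(events_json: str) -> list[tuple[str, str]]:
    """Return (reason, message) pairs for Warning events.

    `events_json` is the text printed by `kubectl get events -o json`.
    """
    data = json.loads(events_json)
    return [
        (ev.get("reason", ""), ev.get("message", ""))
        for ev in data.get("items", [])
        if ev.get("type") == "Warning"
    ]


def get_pod_events_json(pod: str, namespace: str = "default") -> str:
    # The field selector limits the output to events about this one pod.
    result = subprocess.run(
        ["kubectl", "get", "events", "-n", namespace, "-o", "json",
         "--field-selector", f"involvedObject.name={pod}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


if __name__ == "__main__":
    pod_name = "webfrontend-5dc5464686-c7ncf"
    for reason, message in warning_events(get_pod_events_json(pod_name)):
        print(f"{reason}: {message}")
```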

3. If the cluster is full and you want to increase its size, you need to add worker nodes. Note that `kubectl scale` changes the number of replicas of a Deployment or ReplicaSet, not the number of nodes; on a managed cluster such as AKS (used in this example), the node count is changed with `az aks scale`.

On a self-managed cluster built with kubeadm, you can add extra worker nodes to the existing cluster by running the kubeadm join command on each new node:

```
sudo kubeadm join 192.168.122.195:6443 \
    --token nx1jjq.u42y27ip3bhmj8vj \
    --discovery-token-ca-cert-hash sha256:c6de85f6c862c0d58cc3d10fd199064ff25c4021b6e88475822d6163a25b4a6c
```

Detailed steps are given in the article below:

https://computingforgeeks.com/join-new-kubernetes-worker-node-to-existing-cluster/
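Before adding nodes, it can help to see how much CPU is still unrequested. Below is a rough Python sketch, assuming `kubectl` can read nodes and pods in all namespaces; the helper names are hypothetical. It subtracts the CPU requests of scheduled, unfinished pods from the nodes' allocatable CPU. This is a simplification: it ignores init containers and pod overhead, and a positive cluster-wide total can still hide the case where no single node has room for the pending pod, which is what the scheduler actually needs. `kubectl describe nodes` shows the per-node "Allocated resources" figures.

```python
from __future__ import annotations

import json
import subprocess


def cpu_to_millicores(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity such as "1900m", "2" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def cpu_headroom_millicores(nodes_json: str, pods_json: str) -> int:
    """Allocatable CPU over all nodes minus CPU requested by scheduled, unfinished pods."""
    nodes = json.loads(nodes_json).get("items", [])
    pods = json.loads(pods_json).get("items", [])
    allocatable = sum(cpu_to_millicores(n["status"]["allocatable"]["cpu"]) for n in nodes)
    requested = 0
    for pod in pods:
        spec = pod.get("spec", {})
        phase = pod.get("status", {}).get("phase")
        # Only pods bound to a node (nodeName set) that have not finished hold CPU there.
        if not spec.get("nodeName") or phase in ("Succeeded", "Failed"):
            continue
        for container in spec.get("containers", []):
            cpu = container.get("resources", {}).get("requests", {}).get("cpu")
            if cpu:
                requested += cpu_to_millicores(cpu)
    return allocatable - requested


def kubectl_json(*args: str) -> str:
    result = subprocess.run(["kubectl", *args, "-o", "json"],
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    headroom = cpu_headroom_millicores(kubectl_json("get", "nodes"),
                                       kubectl_json("get", "pods", "-A"))
    print(f"Unrequested allocatable CPU across the cluster: {headroom}m")
```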

4. If the pods are not running, or are running but not behaving as expected, and they are not stuck in Pending, we need to check the application logs. Problems such as the application not listening on the expected port, or other application errors, show up here.

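```
PS /home/unixchips> kubectl logs webfrontend-5dc5464686-c7ncf
Listening on port 80

PS /home/unixchips> kubectl logs webfrontend-5dc5464686-ccrj2 --previous
Error from server (BadRequest): previous terminated container "webfrontend" in pod "webfrontend-5dc5464686-ccrj2" not found
```

The `--previous` flag prints the logs of the previous instance of the container, which is useful after a crash and restart. Here it returns an error because this container has never restarted (RESTARTS is 0).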
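A small Python sketch that builds the right `kubectl logs` commands for each container of a pod, assuming the pod JSON comes from `kubectl get pod <name> -o json`; `log_commands` is an illustrative name. It only adds `--previous` when the container's `restartCount` is above zero, which avoids the BadRequest error shown above for a container that has never restarted.

```python
from __future__ import annotations

import json
import subprocess


def log_commands(pod_json: str) -> list[list[str]]:
    """Build kubectl logs commands for every container in a pod.

    `--previous` is only added for containers that have restarted at least once.
    """
    pod = json.loads(pod_json)
    name = pod["metadata"]["name"]
    commands = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        container = status["name"]
        commands.append(["kubectl", "logs", name, "-c", container])
        if status.get("restartCount", 0) > 0:
            commands.append(["kubectl", "logs", name, "-c", container, "--previous"])
    return commands


if __name__ == "__main__":
    raw = subprocess.run(
        ["kubectl", "get", "pod", "webfrontend-5dc5464686-ccrj2", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    for cmd in log_commands(raw):
        print(" ".join(cmd))
```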

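5. If the pods are not ready because the readiness probe is failing (for example, the application needs longer to start), we may need to increase `initialDelaySeconds` for the readiness probe in the deployment manifest inside the Helm chart's templates folder. The current probe settings can be checked with: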

```
PS /home/unixchips> kubectl describe pod webfrontend-5dc5464686-c7ncf | grep -i readiness
    Readiness:      http-get http://:http/ delay=0s timeout=1s period=10s #success=1 #failure=3
```

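Then set the delay in the `readinessProbe` section of the container spec, for example:

```
ports:
  - name: http
    containerPort: 80
    protocol: TCP
livenessProbe:
  httpGet:
    path: /
    port: http
readinessProbe:
  httpGet:
    path: /
    port: http
  initialDelaySeconds: 20
```

The field name is case-sensitive: it must be `initialDelaySeconds`, at the same level as `httpGet`.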
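To compare the live probe settings with the template, note that the `delay`, `timeout`, `period`, `#success` and `#failure` values printed by `kubectl describe pod` correspond to the probe fields `initialDelaySeconds`, `timeoutSeconds`, `periodSeconds`, `successThreshold` and `failureThreshold`. Below is a minimal Python sketch that parses such a line, assuming the describe format shown above; `parse_probe` is a hypothetical helper.

```python
from __future__ import annotations

import re
import subprocess

# Matches the timing part of a Liveness/Readiness line from `kubectl describe pod`.
PROBE_RE = re.compile(
    r"delay=(\d+)s\s+timeout=(\d+)s\s+period=(\d+)s\s+#success=(\d+)\s+#failure=(\d+)"
)
FIELDS = ("initialDelaySeconds", "timeoutSeconds", "periodSeconds",
          "successThreshold", "failureThreshold")


def parse_probe(line: str) -> dict[str, int]:
    """Map a describe-output probe line to the probe field names used in YAML."""
    match = PROBE_RE.search(line)
    if match is None:
        raise ValueError(f"no probe settings found in: {line!r}")
    return dict(zip(FIELDS, map(int, match.groups())))


if __name__ == "__main__":
    out = subprocess.run(
        ["kubectl", "describe", "pod", "webfrontend-5dc5464686-c7ncf"],
        capture_output=True, text=True, check=True,
    ).stdout
    for raw in out.splitlines():
        stripped = raw.strip()
        if stripped.startswith(("Liveness:", "Readiness:")):
            print(stripped.split(":", 1)[0], parse_probe(stripped))
```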

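6. If `kubectl describe pod webfrontend-5dc5464686-c7ncf` reports an error related to resource limits, we have to relax the limits by updating the LimitRange configuration for the pods. (If a pod is rejected when it is created, it never exists, so the error appears in the ReplicaSet's events instead, for example in `kubectl describe rs webfrontend-5dc5464686`.)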

```
apiVersion: v1
kind: LimitRange
metadata:
  name: webfrontend-limit
spec:
  limits:
  - max:
      memory: 1Gi
    min:
      memory: 500Mi
    type: Container
```
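A LimitRange with `min` and `max` requires each container's memory request and limit to fall within that range; otherwise the pod is rejected when it is created. Below is a minimal Python sketch of that check for the values above, handling only whole numbers with binary suffixes (Ki, Mi, Gi, Ti) or no suffix; `memory_to_bytes` and `check_memory_limit` are illustrative names, and the real check is done by the API server.

```python
from __future__ import annotations

# Binary suffixes only; decimal ones (k, M, G) and fractions are not handled here.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "500Mi" or "1Gi" to bytes."""
    for suffix, factor in _BINARY.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def check_memory_limit(value: str, minimum: str = "500Mi", maximum: str = "1Gi") -> str | None:
    """Return an error string if `value` falls outside [minimum, maximum], else None.

    The defaults mirror the webfrontend-limit LimitRange above.
    """
    size = memory_to_bytes(value)
    if size < memory_to_bytes(minimum):
        return f"{value} is below the LimitRange min {minimum}"
    if size > memory_to_bytes(maximum):
        return f"{value} is above the LimitRange max {maximum}"
    return None
```

For example, `check_memory_limit("512Mi")` returns `None`, while `check_memory_limit("2Gi")` reports that the value is above the maximum.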

Apply it as below:

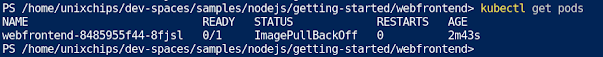

```
kubectl apply -f limitrange.yml
```

More details are given in the link below.

7. Errors related to images. In most cases, when the image being pulled is not correct, `kubectl get pods` shows the pod status as "ImagePullBackOff".

This is caused by a mismatch in the image name, an image pull policy issue, or even a problem with the credentials for a private registry.

For example, the pod status below shows ErrImagePull and ImagePullBackOff.

If we check the logs, it shows:

```
PS /home/unixchips/dev-spaces/samples/nodejs/getting-started/webfrontend/webfrontend> kubectl logs webfrontend-8485955f44-8fjsl
Error from server (BadRequest): container "webfrontend" in pod "webfrontend-8485955f44-8fjsl" is waiting to start: trying and failing to pull image
```

To get more details, run `kubectl describe pod webfrontend-8485955f44-8fjsl`; its Events section shows:

```
Warning  Failed   69m (x4 over 71m)    kubelet  Failed to pull image "unixchipsacr1.azurecr.io/webfronten:v1": [rpc error: code = NotFound desc = failed to pull and unpack image "unixchipsacr1.azurecr.io/webfronten:v1": failed to resolve reference "unixchipsacr1.azurecr.io/webfronten:v1": unixchipsacr1.azurecr.io/webfronten:v1: not found, rpc error: code = Unknown desc = failed to pull and unpack image "unixchipsacr1.azurecr.io/webfronten:v1": failed to resolve reference "unixchipsacr1.azurecr.io/webfronten:v1": failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized]
Warning  Failed   69m (x4 over 71m)    kubelet  Error: ErrImagePull
Normal   BackOff  16m (x242 over 71m)  kubelet  Back-off pulling image "unixchipsacr1.azurecr.io/webfronten:v1"
Warning  Failed   76s (x308 over 71m)  kubelet  Error: ImagePullBackOff
```

From these events we can see that the image name is not correct: one "d" is missing from "webfrontend" (the deployment references `webfronten:v1`), so the image cannot be pulled and the pods do not come up. To fix it, we have to correct the image name in the deployment.yml file.
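To catch this kind of typo before deploying, you could compare the repository part of the image reference with the repositories that actually exist in the registry (for ACR, `az acr repository list --name unixchipsacr1 --output json` prints them as a JSON array). Below is a minimal Python sketch using the standard `difflib` module; the helper names are hypothetical, it assumes a fully qualified `registry/repository:tag` reference without a digest, and it does not check whether the tag exists.

```python
from __future__ import annotations

import difflib
import json
import subprocess


def split_image(image: str) -> tuple[str, str, str]:
    """Split "registry/repository:tag" into (registry, repository, tag)."""
    registry, _, rest = image.partition("/")
    repository, _, tag = rest.rpartition(":")
    if not repository:  # no ":tag" part, so Kubernetes would use "latest"
        repository, tag = rest, "latest"
    return registry, repository, tag


def suggest_repository(image: str, repositories: list[str]) -> str | None:
    """Return the closest existing repository if the image points at a missing one."""
    _, repository, _ = split_image(image)
    if repository in repositories:
        return None
    matches = difflib.get_close_matches(repository, repositories, n=1)
    return matches[0] if matches else None


if __name__ == "__main__":
    repos = json.loads(subprocess.run(
        ["az", "acr", "repository", "list", "--name", "unixchipsacr1", "--output", "json"],
        capture_output=True, text=True, check=True,
    ).stdout)
    print(suggest_repository("unixchipsacr1.azurecr.io/webfronten:v1", repos))
```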

There are many more issues to cover in this chart, and I will go through them in later blogs.

Thank you for reading. Stay tuned!
