This post covers some common issues we face in AKS clusters and how to troubleshoot them.

1. In some cases we may need to log in to the pods using "SSH" to collect logs or troubleshoot. Let's look at how to set that up.

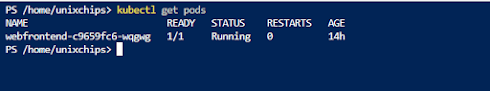

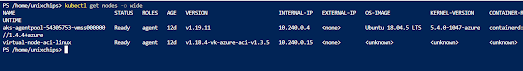

First, create an SSH connection to the Linux node. In this example, one pod is running in the cluster.

To connect to the pod, use the kubectl debug command, which runs a container image and attaches to it.
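As a rough sketch of the kubectl debug step, the helper below assembles the command line for an interactive debug container. It assumes the target is the node that hosts the pod; the node name and the image are placeholders chosen for illustration, not values from this post.

```python
import subprocess


def build_node_debug_command(node_name, image):
    """Return the kubectl argument list for an interactive debug container on a node."""
    return ["kubectl", "debug", f"node/{node_name}", "-it", f"--image={image}"]


def open_debug_shell(node_name, image):
    """Run the command; needs kubectl on PATH and access to the cluster."""
    return subprocess.run(build_node_debug_command(node_name, image), check=False).returncode


# Hypothetical node name and image, shown only to illustrate the command shape.
print(" ".join(build_node_debug_command("my-aks-node", "busybox")))
```

Take the real node name from the output of "kubectl get nodes" before calling open_debug_shell.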

2. If we get a "quota exceeded" error during cluster creation or upgrade, we have to request more vCPUs by creating a support request.

Code=OperationNotAllowed

Message=Operation results in exceeding quota limits of Core.

Maximum allowed: 4, Current in use: 4, Additional requested: 2.

In the Azure portal, select Subscriptions.

Select the subscription whose quota needs to be increased.

Select Usage + quotas.

Select Request increase and choose the metrics that need to be increased.
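To work out how much quota to ask for, the three numbers in the error message can be combined: the new limit has to cover what is in use plus what was requested. The snippet below is a minimal sketch of that arithmetic; the function name and the regular expression are illustrative and assume the message keeps the wording shown in the error.

```python
import re

# Matches the three figures in the quota error text shown in this post.
QUOTA_PATTERN = re.compile(
    r"Maximum allowed: (\d+), Current in use: (\d+), Additional requested: (\d+)"
)


def required_quota(message):
    """Return (limit_needed, shortfall) parsed from a quota error message."""
    match = QUOTA_PATTERN.search(message)
    if match is None:
        raise ValueError("no quota figures found in message")
    allowed, in_use, requested = (int(group) for group in match.groups())
    needed = in_use + requested
    return needed, max(0, needed - allowed)


message = "Maximum allowed: 4, Current in use: 4, Additional requested: 2."
print(required_quota(message))  # (6, 2)
```

For the error above, the limit must be raised to at least 6 cores, which is 2 more than the current limit.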

3. Troubleshooting cluster issues with the AKS diagnostic tool

Azure provides a useful tool with AKS for identifying common cluster and network issues. It is called "Diagnose and solve problems" and is found in the left-hand menu of the AKS configuration. Two types of diagnostics are available: cluster insights and networking.

The cluster diagnostics are shown below; we can open each link to get more details about the diagnostic process.

Other tests cover the networking side.

Clicking each tab shows more details for that networking check.

This is one of the best ways to identify cluster and network issues in AKS.

4. Getting an error while connecting to the Kube API server: "Error dialing backend TCP ..."

In this case, we have to make sure that the "aks-link" or "tunnelfront" pod is running in the output of the "kubectl get pods --namespace kube-system" command. If it is not, we may need to delete the pod so that it is recreated.
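The check on the kube-system pods can be sketched as a small filter over the text that "kubectl get pods --namespace kube-system" prints. This is only an illustration: it assumes the default column layout (NAME, READY, STATUS, ...) and that the pod names start with "aks-link" or "tunnelfront"; the sample output is made up.

```python
def unhealthy_tunnel_pods(kubectl_output):
    """Return names of aks-link / tunnelfront pods whose STATUS is not Running."""
    unhealthy = []
    for line in kubectl_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 3:
            continue
        name, status = fields[0], fields[2]
        if name.startswith(("aks-link", "tunnelfront")) and status != "Running":
            unhealthy.append(name)
    return unhealthy


# Hypothetical output, for illustration only.
sample = """\
NAME                 READY   STATUS             RESTARTS   AGE
coredns-xxxxx        1/1     Running            0          2d
tunnelfront-xxxxx    0/1     CrashLoopBackOff   12         2d
"""
print(unhealthy_tunnel_pods(sample))  # ['tunnelfront-xxxxx']
```

Any pod name returned here is a candidate for "kubectl delete pod <name> --namespace kube-system", after which it should be recreated.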

5. When we try to upgrade or scale the cluster, we get the error below:

"Changing property (image reference) is not allowed"

This error is caused by modifying or deleting the tags on the agent nodes in the AKS cluster. It is an unexpected error that results from changes to the AKS cluster properties.

6. The next error we may get while scaling the cluster is "cluster is in failed state and upgrading or scaling will not work until it is fixed".

This issue is due to a lack of compute resources, so first we have to bring the cluster back to a stable state within the quota, then create a service request to increase the quota.
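Before and after fixing the quota, it can help to confirm whether the cluster is still reported as failed. The sketch below reads the "provisioningState" field from the JSON that "az aks show --resource-group <group> --name <cluster> --output json" prints; the function name is illustrative and the JSON literal is a trimmed, made-up example.

```python
import json


def cluster_state(az_aks_show_json):
    """Extract provisioningState from the JSON printed by 'az aks show'."""
    return json.loads(az_aks_show_json).get("provisioningState", "Unknown")


# Trimmed, hypothetical output; the real document has many more fields.
sample = '{"name": "my-cluster", "provisioningState": "Failed"}'
print(cluster_state(sample))  # Failed
```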

7. "Too many requests" - 429 errors

When a Kubernetes cluster on Azure (AKS or not) scales up and down frequently or uses the cluster autoscaler (CA), those operations can result in a large number of HTTP calls that exceed the assigned subscription quota, leading to failures. Make sure you are running at least AKS 1.18.x; if not, upgrade to the latest version.
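A quick way to apply the 1.18.x rule is to compare the major and minor parts of the cluster version against that minimum. The helper below is a minimal sketch; the function name is illustrative, and it assumes a plain "major.minor.patch" version string, however you obtain it.

```python
def meets_minimum(version, minimum=(1, 18)):
    """Return True if a version string such as '1.17.13' is at least the minimum."""
    major, minor = (int(part) for part in version.lstrip("v").split(".")[:2])
    return (major, minor) >= minimum


print(meets_minimum("1.17.13"))  # False
print(meets_minimum("1.18.4"))   # True
```

A False result means the cluster is below 1.18 and an upgrade is worth planning.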
